MULTIREGRESS Function (PV-WAVE Advantage)

Fits a multiple linear regression model using least squares and optionally compute summary statistics for the regression model.

Usage

result = MULTIREGRESS(x, y)

Input Parameters

x—Two-dimensional matrix containing the independent (explanatory) variables. The data value for the ith observation of the jth independent (explanatory) variable should be in element x(i, j).

y—Two-dimensional matrix containing of size N_ELEMENTS(x(*,0)) by n_dependent containing the dependent (response) variable(s). The ith column of y contains the ith dependent variable.

Returned Value

result—If keyword No_Intercept is not used, MULTIREGRESS is an array of length N_ELEMENTS (x(*, 0)) containing a least-squares solution for the regression coefficients. The estimated intercept is the initial component of the array.

Input Keywords

Double—If present and nonzero, double precision is used.

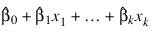

No_Intercept—If present and nonzero, the intercept term:

is omitted from the model. By default, the fitted value for observation i is:

where k is the number of independent variables.

Tolerance—Tolerance used in determining linear dependence. For MULTIGRESS, Tolerance = 100 × ε, where ε is machine precision (default).

Frequencies—One-dimensional array containing the frequency for each observation. Default: Frequencies(*) = 1

Weights—One-dimensional array containing the weight for each observation. Default: Weights(*) = 1

Predict_Info—Named variable into which the one-dimensional byte array containing information needed by MULTIPREDICT is stored. The data contained in this array is in an encrypted format and should not be altered before it is used in subsequent calls to MULTIPREDICT.

Output Keywords

Rank—Named variable into which the rank of the fitted model is stored.

Coef_Covariances—Named variable into which the m × m × n_dependent array containing estimated variances and covariances of the estimated regression coefficients is stored. Here, m is number of regression coefficients in the model. If No_Intercept is specified, m = N_ELEMENTS(x(0, *)); otherwise, m = (N_ELEMENTS(x(0, *)) + 1).

XMean—Named variable into which the array containing the estimated means of the independent variables is stored.

Residual—Variable into which the array containing the residuals is stored.

Anova_Table—Named variable into which the array containing the analysis of variance table is stored. Each column of

Anova_table corresponds to a dependent variable. The analysis of variance statistics are shown in

Analysis of Variance Statistics:

T_Tests—Named variable into which the NPAR (where NPAR is equal to the number of parameters in the model) by 4 array containing statistics relating to the regression coefficients is stored.

Each row corresponds to a coefficient in the model, where NPAR is the number of parameters in the model. Row i + INTCEP corresponds to the ith independent variable, where INTCEP is equal to 1 if an intercept is in the model and 0 otherwise, and i = 0, 1, 2, ..., NPAR – 1. The statistics in the columns are as follows:

0

—coefficient estimate

1

—estimated standard error of the coefficient estimate

2

—t-statistic for the test that the coefficient is 0

3

—p-value for the two-sided

t test

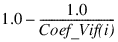

Coef_Vif—Named variable into which a one-dimensional array of length NPAR containing the variance inflation factor, where NPAR is the number of parameters, is stored. The (i + INTCEP)-th element corresponds to the ith independent variable, where i = 0, 1, 2, ..., NPAR – 1, and INTCEP is equal to 1 if an intercept is in the model and 0 otherwise. The square of the multiple correlation coefficient for the ith regressor after all others is obtained from Coef_Vif by the following formula:

If there is no intercept or there is an intercept and i = 0, the multiple correlation coefficient is not adjusted for the mean.

Discussion

Function MULTIREGRESS fits a multiple linear regression model with or without an intercept.

By default, the multiple linear regression model is:

yi = β0 + β1xi1 + β2xi2 + ... + βkxik + εi i = 0, 2, ..., n

where the observed values of the yi’s (input in y) are the responses or values of the dependent variable; the xi1’s, xi2’s, ..., xik’s (input in x) are the settings of the k independent variables; β0, β1, ..., βk are the regression coefficients whose estimated values are to be output by MULTIREGRESS; and the εi’s are independently distributed normal errors, each with mean zero and variance σ2. Here, n = (N_ELEMENTS(x(*, 0))). Note that by default, β0 is included in the model.

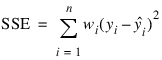

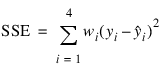

Function MULTIREGRESS computes estimates of the regression coefficients by minimizing the weighted sum of squares of the deviations of the observed response yi from the fitted response:

for the n observations. This weighted minimum sum of squares (the error sum of squares) is output as one of the analysis of variance statistics if Anova_Table is specified and is computed as shown below:

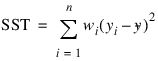

Another analysis of variance statistics is the total sum of squares. By default, the weighted total sum of squares is the weighted sum of squares of the deviations of yi from its mean:

the so-called corrected total sum of squares. This statistic is computed as follows:

When No_Intercept is specified, the total weighted sum of squares is the sum of squares of yi, the so called uncorrected total weighted sum of squares. This is computed as follows:

See Draper and Smith (1981) for a good general treatment of the multiple linear regression model, its analysis, and many examples.

In order to compute a least-squares solution, MULTIREGRESS performs an orthogonal reduction of the matrix of regressors to upper-triangular form. The reduction is based on one pass through the rows of the augmented matrix (x, y) using fast Givens transformations (Golub and Van Loan 1983, pp. 156–162; Gentleman 1974). This method has the advantage that it avoids the loss of accuracy that results from forming the crossproduct matrix used in the normal equations.

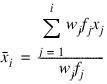

By default, the current means of the dependent and independent variables are used to internally center the data for improved accuracy. Let xj be a column vector containing the jth row of data for the independent variables. Let:

represent the mean vector for the independent variables given the data for rows 0, 1, ..., i. The current mean vector is defined to be:

where the wj’s and the fj’s are the weights and frequencies. The ith row of data has:

subtracted from it and is multiplied by:

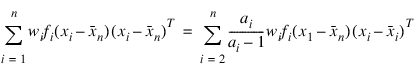

Although a crossproduct matrix is not computed, the validity of this centering operation can be seen from the formula below for the sum-of-squares and crossproducts matrix:

An orthogonal reduction on the centered matrix is computed. When the final computations are performed, the intercept estimate and the first row and column of the estimated covariance matrix of the estimated coefficients are updated (if Coef_Covariances is specified) to reflect the statistics for the original (uncentered) data. This means that the estimate of the intercept is for the uncentered data.

As part of the final computations, MULTIGRESS checks for linearly dependent regressors. In particular, linear dependence of the regressors is declared if any of the following three conditions is satisfied:

A regressor equals zero.

Two or more regressors are constant.

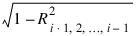

The expression:

is less than or equal to Tolerance. Here, Ri·1, 2, ..., i – 1 is the multiple correlation coefficient of the ith independent variable with the first i – 1 independent variables. If no intercept is in the model, the “multiple correlation” coefficient is computed without adjusting for the mean.

On completion of the final computations, if the ith regressor is declared to be linearly dependent upon the previous i – 1 regressors, then the ith coefficient estimate and all elements in the ith row and ith column of the estimated variance-covariance matrix of the estimated coefficients (if Coef_Covariances is specified) are set to zero. Finally, if a linear dependence is declared, an informational (error) message, code STAT_RANK_DEFICIENT, is issued indicating the model is not full rank.

Function MULTIREGRESS also can be used to compute summary statistics from a fitted general linear model. The model is y = Xβ + ε, where y is the n x 1 vector of responses, X is the n × p matrix of regressors, β is the p × 1 vector of regression coefficients, and ε is the n × 1vector of errors whose elements are each independently distributed with mean zero and variance σ2. Function MULTIREGRESS uses the results of this fit to compute summary statistics, including analysis of variance, sequential sum of squares, t tests, and an estimated variance-covariance matrix of the estimated regression coefficients.

Some generalizations of the general linear model are allowed. If the ith element of ε has variance of:

and the weights wi are used in the fit of the model, MULTIREGRESS produces summary statistics from the weighted least-squares fit. More generally, if the variance-covariance matrix of ε is σ2V, MULTIREGRESS can be used to produce summary statistics from the generalized least-squares fit. Function MULTIREGRESS can be used to perform a generalized least-squares fit by regressing y*on X* where y* = (T –1)Ty, X* = (T –1)TX and T satisfies TTT = V.

The sequential sum of squares for the ith regression parameter is given by:

The regression sum of squares is given by the sum of the sequential sum of squares. If an intercept is in the model, the regression sum of squares is adjusted for the mean, i.e.:

is not included in the sum.

The estimate of σ2 is s2 (stored in Anova_Table(7) that is computed as SSE/DFE.

If R is nonsingular, the estimated variance-covariance matrix of:

(stored in Coef_Covariances) is computed by s2R –1(R –1)T.

If R is singular, corresponding to rank(X) < p, a generalized inverse is used. For a matrix G to be a gi (i = 1, 2, 3, or 4) inverse of a matrix A, G must satisfy conditions j (for j ≤ i) for the Moore-Penrose inverse but generally must fail conditions k (for k > i). The four conditions for G to be a Moore-Penrose inverse of A are as follows:

1. AGA = A

2. GAG = G

3. AG is symmetric

4. GA is symmetric

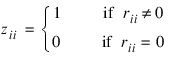

In the case where R is singular, the method for obtaining Coef_Covariances follows the discussion of Maindonald (1984, pp. 101–103). Let Z be the diagonal matrix with diagonal elements defined by the following:

Let G be the solution to RG = Z obtained by setting the ith ({i:rii = 0}) row of G to zero. Keyword Coef_Covariances is set to s2GGT. (G is a g3 inverse of R, represented by:

the result

is a symmetric g2 inverse of RTR = XTX. See Sallas and Lionti 1988.)

Note that keyword Coef_Covariances can be used only to get variances and covariances of estimable functions of the regression coefficients, i.e., nonestimable functions (linear combinations of the regression coefficients not in the space spanned by the nonzero rows of R) must not be used. See, for example, Maindonald (1984, pp. 166–168) for a discussion of estimable functions.

The estimated standard errors of the estimated regression coefficients (stored in Column 1 of T_Tests) are computed as square roots of the corresponding diagonal entries in Coef_Covariances.

For the case where an intercept is in the model, set:

equal to the matrix R with the first row and column deleted. Generally, the variance inflation factor (VIF) for the ith regression coefficient is computed as the product of the ith diagonal element of RTR and the ith diagonal element of its computed inverse. If an intercept is in the model, the VIF for those coefficients not corresponding to the intercept uses the diagonal elements of:

(see Maindonald 1984, p. 40).

Remarks

When R is nonsingular and comes from an unrestricted regression fit, Coef_Covariances is the estimated variance-covariance matrix of the estimated regression coefficients and Coef_Covariances = (SSE/DFE) (RTR)–1.

Otherwise, variances and covariances of estimable functions of the regression coefficients can be obtained using Coef_Covariances and Coef_Covariances = (SSE/DFE) (GDGT). Here, D is the diagonal matrix with diagonal elements equal to zero if the corresponding rows of R are restrictions and with diagonal elements equal to 1 otherwise. Also, G is a particular generalized inverse of R.

Example 1

A regression model:

yi = β 0 + β 1x i 1 + β 2x i 2 + β 3 x i 3 + ε i i = 1, 2, ..., 9

is fitted to data taken from Maindonald (1984, pp. 203–204).

; Set up the data.

RM, x, 9, 3

row 0: 7 5 6

row 1: 2 -1 6

row 2: 7 3 5

row 3: -3 1 4

row 4: 2 -1 0

row 5: 2 1 7

row 6: -3 -1 3

row 7: 2 1 1

row 8: 2 1 4

y = [7, -5, 6, 5, 5, -2, 0, 8, 3]

; Call MULTIREGRESS to compute the coefficients.

coefs = MULTIREGRESS(x, y)

; Output the results.

PM, coefs, Title = 'Least-Squares Coefficients', $

Format = '(f10.5)'

; This results in the following output:

; Least-Squares Coefficients

; 7.73333

; -0.20000

; 2.33333

; -1.66667

Example 2: Weighted Least-squares Fit

A weighted least-squares fit is computed using the model

yi = β0 + β1x i 1 + β2x i 2 + εi i = 1, 2, ..., 4

and weights 1/i2 discussed by Maindonald (1984, pp. 67–68).

In the example, Weights is specified. The minimum sum of squares for error in terms of the original untransformed regressors and responses for this weighted regression is:

where wi = 1/i2, represented in the C code as array w.

First, a procedure is defined to output the results, including the analysis of variance statistics.

PRO print_results, Coefs, Anova_Table

coef_labels = ['intercept', 'linear', 'quadratic']

PM, coef_labels, coefs, Title = $

'Least-Squares Polynomial Coefficients',$

Format = '(3a20, /,3f20.4, // )'

anova_labels = ['degrees of freedom for regression', $

'degrees of freedom for error', $

'total (corrected) degrees of freedom', $

'sum of squares for regression', $

'sum of squares for error', $

'total (corrected) sum of squares', $

'regression mean square', $

'error mean square', 'F-statistic', $

'p-value', 'R-squared (in percent)', $

'adjusted R-squared (in percent)', $

'est. standard deviation of model error', $

'overall mean of y', $

'coefficient of variation (in percent)']

PM, '* * * Analysis of Variance * * * ', Format = '(a50, /)'

FOR i=0L, 14 DO PM, anova_labels(i), $

anova_table(i), Format = '(a40, f20.2)'

END

; Input the values for x.

RM, x, 4, 2

row 0: -2 0

row 1: -1 2

row 2: 2 5

row 3: 7 3

; Define the dependent variables.

y = [-3.0, 1.0, 2.0, 6.0]

weights = FLTARR(4)

; Define the weights and print them.

FOR i=0L, 3 DO weights(i) = 1/((i + 1.0)^2)

; PV-WAVE prints the following:

; PM, weights

; 1.00000

; 0.250000

; 0.111111

; 0.0625000

coefs = MULTIREGRESS(x, y, Weights = weights, $

Anova_Table = anova_table)

; Print results using the procedure defined above.

print_results, coefs, anova_table

This results in the following output:

Least-Squares Polynomial Coefficients

intercept linear quadratic

-1.4307 0.6581 0.7485

* * * Analysis of Variance * * *

degrees of freedom for regression 2.00

degrees of freedom for error 1.00

total (corrected) degrees of freedom 3.00

sum of squares for regression 7.68

sum of squares for error 1.01

total (corrected) sum of squares 8.69

regression mean square 3.84

error mean square 1.01

F-statistic 3.79

p-value 0.34

R-squared (in percent) 88.34

adjusted R-squared (in percent) 65.03

est. standard deviation of model error 1.01

overall mean of y -1.51

coefficient of variation (in percent) -66.55

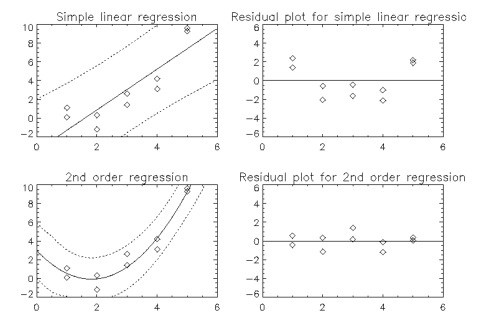

Example 3: Plotting Results

This example uses MULTIREGRESS to fit data with both simple linear regression and second order regression. The results, shown in

Plots of Fit, are plotted along with confidence bands and residual plots.

PRO MULTIREGRESS_ex

!P.Multi = [0, 2, 2]

x = [1.0, 1.0, 2.0, 2.0, 3.0, 3.0, 4.0, 4.0, 5.0, 5.0]

y = [1.1, 0.1, -1.2, 0.3, 1.4, 2.6, 3.1, 4.2, 9.3, 9.6]

z = FINDGEN(120)/20

; Perform a simple linear regression.

line = MAKE_ARRAY(120, Value = 0.0)

Coefs = MULTIREGRESS(x, y, Predict_Info = predict_info)

y_hat = MULTIPREDICT(predict_info, x, Residual = residual, $

Y = y)

y_hat = MULTIPREDICT(predict_info, z, Ci_Ptw_New_Samp = ci)

PLOT, x, y, Title = 'Simple linear regression', Psym = 4, $

XRange = [0.0, 6.0]

y2 = coefs(0) + coefs(1) * z

OPLOT, z, y2

OPLOT, z, ci(0, *), Linestyle = 1

OPLOT, z, ci(1, *), Linestyle = 1

PLOT, x, residual, psym = 4, Title = $

'Residual plot for simple linear regression', $

XRange = [0.0, 6.0], YRange = [-6, 6]

OPLOT, z, line

; Compute the second-order regression.

x2 = [[x], [x * x]]

coefs = MULTIREGRESS(x2, y, Predict_Info = predict_info)

y_hat = MULTIPREDICT(predict_info, x2, Residual = residual, $

Y = y)

y_hat = MULTIPREDICT(predict_info, $

[[z], [z * z]], Ci_Ptw_New_Sample = ci)

PLOT, x, y, Title = '2nd order regression',$

Psym = 4, XRange = [0.0, 6.0]

y2 = coefs(0) + coefs(1) * z + coefs(2) * z * z

OPLOT, z, y2

OPLOT, z, ci(0, *), Linestyle = 1

OPLOT, z, ci(1, *), Linestyle = 1

PLOT, x2, residual, Psym = 4, Title = $

'Residual plot for 2nd order regression', $

XRange = [0.0, 6.0], YRange = [-6, 6]

OPLOT, z, line

END

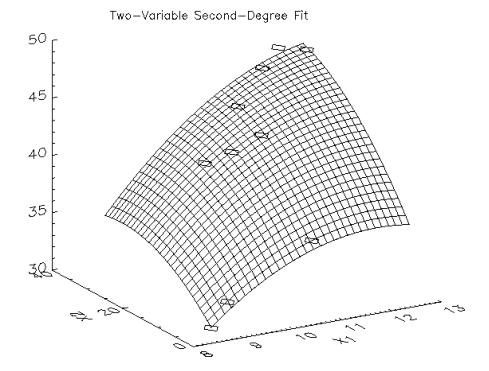

Example 4: Two-variable, Second-degree Fit

In this example, MULTIREGRESS is used to compute a two variable second-degree fit to data. The results are shown in

Two-variable, Second Degree Fit.

PRO MULTIREGRESS_ex

; Define the data.

x1 = FLTARR(10, 5)

x1(*, 0) = [8.5, 8.9, 10.6, 10.2, 9.8, $

10.8, 11.6, 12.0, 12.5, 10.9]

x1(*, 1) = [2, 3, 3, 20, 22, 20, 31, 32, 31, 28]

x1(*, 2) = x1(*, 0) * x1(*, 1)

x1(*, 3) = x1(*, 0) * x1(*, 0)

x1(*, 4) = x1(*, 1) * x1(*, 1)

y = [30.9, 32.7, 36.7, 41.9, 40.9, 42.9, 46.3, 47.6, 47.2, $

44.0]

nxgrid = 30

nygrid = 30

; Setup vectors for surface plot. These will be (nxgrid x

; nygrid) elements each, evenly spaced over the range of the

; data in x1(*, 0) and x1(*, 1).

ax1 = min(x1(*, 0)) + (max(x1(*, 0)) - $

min(x1(*, 0))) * findgen(nxgrid)/(nxgrid - 1)

ax2 = min(x1(*, 1)) + (max(x1(*, 1)) - $

min(x1(*, 1))) * FINDGEN(nxgrid)/(nxgrid - 1)

; Compute regression coefficients.

coefs = MULTIREGRESS(x1, y, Residual = resid)

; Create two-dimensional array of evaluations of the

; regression model at points in grid established by ax1

; and ax2.

z = FLTARR(nxgrid, nygrid)

FOR i=0L, nxgrid - 1 DO BEGIN

FOR j=0L, nygrid-1 DO BEGIN

z(i,j) = Coefs(0) $

+ Coefs(1) * ax1(i) + Coefs(2) * ax2(j) $

+ Coefs(3) * ax1(i) * ax2(j) $

+ Coefs(4) * ax1(i)^2 $

+ Coefs(5) * ax2(j)^2

END

END

!P.Charsize = 2

; Plot the results.

SURFACE, z, ax1, ax2, /Save, XTitle = 'X1', YTitle = 'X2'

PLOTS, x1(*, 0), x1(*, 1), y, /T3d, Psym = 4, Symsize = 3

XYOUTS, .3, .9, /Normal, 'Two-Variable Second-Degree Fit'

END

Warning Errors

STAT_RANK_DEFICIENT—Model is not full rank. There is not a unique least-squares solution.

Version 2017.0

Copyright © 2017, Rogue Wave Software, Inc. All Rights Reserved.