Using IPLM Cache

Perforce IPLM caches IPs with IPLM Cache, a performance and data integrity optimization provided by Perforce. This section gives an overview of IPLM Cache and describes the commands a Perforce IPLM user can use to interact with it.

IP caching

When Perforce IPLM builds a workspace, it places all the resources in the incoming IP hierarchy into their specified directory locations in the workspace. If the files managed by an IP resource are populated directly into the workspace, the IPV is said to be in 'local' mode. Deploying IPLM Cache provides a second option: loading the IPV in 'refer' mode from the central IPLM Cache directory. IPLM Cache manages a central area on disk (a network drive) that holds copies of regularly used IPVs from various projects in a single location. Instead of spending time and disk space bringing a local copy of each IPV's data into the workspace, Perforce IPLM creates a filesystem link from the IPV's workspace directory to its copy in the central IPLM Cache.

Because linking to already populated cached IPVs bypasses the slower DM populate, Perforce IPLM can generate workspaces containing massive amounts of data in seconds. Refer mode provides a read-only copy of the data in the IP hierarchy; when edits are needed, one or more IPVs can be switched from refer mode to local mode to bring in an editable local copy of just the desired data. Because users typically need to edit only certain portions of large data sets, IPLM Cache saves significant user time.
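To make the two modes concrete, here is a minimal Python sketch that models them with plain filesystem operations, assuming a POSIX filesystem with symlink support. It is not how Perforce IPLM is implemented: the function names `refer` and `local` and the directory paths are hypothetical, and the copy in `local` only stands in for the DM populate that Perforce IPLM would actually run.

```python
import shutil
import tempfile
from pathlib import Path


def refer(ws_ip_dir: Path, cached_ipv_dir: Path) -> None:
    """Refer mode: make the workspace IP directory a link into the cache."""
    if ws_ip_dir.is_symlink():
        ws_ip_dir.unlink()
    elif ws_ip_dir.exists():
        shutil.rmtree(ws_ip_dir)  # drop the local copy
    ws_ip_dir.symlink_to(cached_ipv_dir, target_is_directory=True)


def local(ws_ip_dir: Path) -> None:
    """Local mode: replace the link with an editable copy of the files.

    Copying from the cache is a stand-in for the DM populate that
    Perforce IPLM would run; it is an assumption of this sketch.
    """
    if not ws_ip_dir.is_symlink():
        raise ValueError(f"{ws_ip_dir} is not in refer mode")
    cached_ipv_dir = ws_ip_dir.resolve()
    ws_ip_dir.unlink()
    shutil.copytree(cached_ipv_dir, ws_ip_dir)
    for path in ws_ip_dir.rglob("*"):
        if path.is_file():
            path.chmod(0o644)  # local copies are writable


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        cached = root / "picache" / "tutorial" / "padring" / "TRUNK" / "10"
        cached.mkdir(parents=True)
        (cached / "pad.v").write_text("module pad; endmodule\n")
        (cached / "pad.v").chmod(0o444)  # cached data is read-only to users

        ws_ip = root / "ws" / "padring"
        ws_ip.parent.mkdir()
        refer(ws_ip, cached)
        print("refer mode, link:", ws_ip.is_symlink())
        local(ws_ip)
        print("local mode, link:", ws_ip.is_symlink())
```

Creating the link costs almost nothing regardless of IPV size, while the local switch pays for a full copy, which mirrors why refer mode is the fast default and local mode is reserved for the IPVs being edited.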

Many customers require that significant milestones (verification, simulation, tapeout, and so on) be conducted on refer mode data that is read-only to the user (or automated job) running the process. This prevents mistakes caused by accidentally editing local data.

IPLM Cache is not required to load Perforce IPLM workspaces; it is an optional enhancement that extends workspace capabilities.

IPLM Cache 1.9 added support for caching Subversion type IPVs, as well as custom DMs that are configured to support IPLM Cache. Before IPLM Cache 1.9, only Perforce type IPVs could be loaded into IPLM Cache.

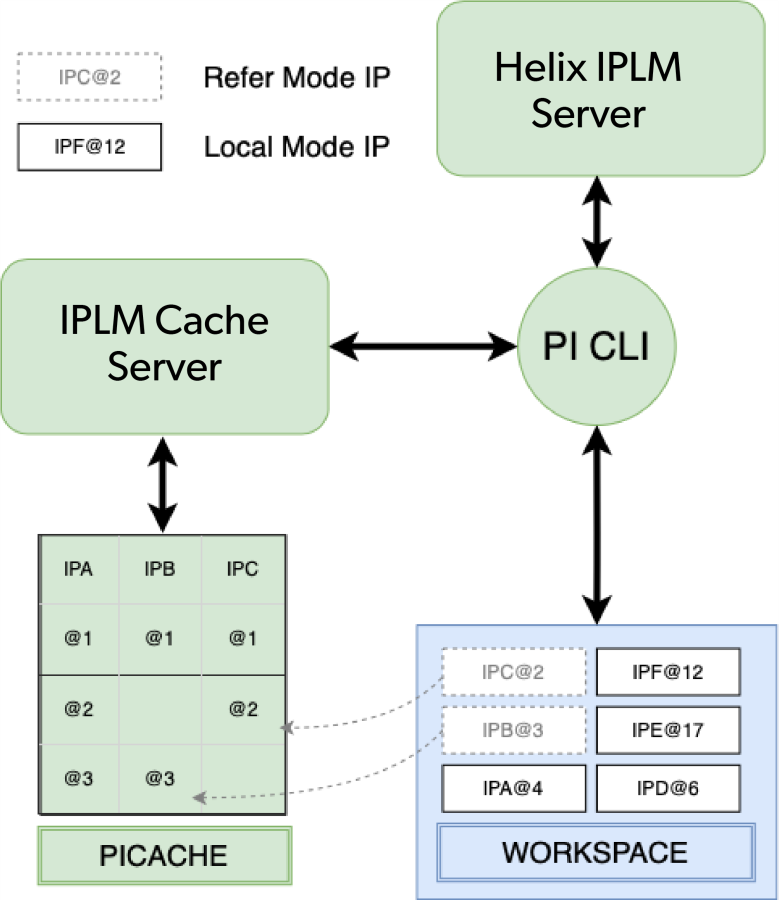

IPLM Cache architecture

IPLM Cache consists of an IPLM Cache server that communicates with PiClient to manage cached data for user workspaces. An IPLM Cache server is typically deployed per customer site, and users interact with the cache only indirectly, through PiClient. The IPLM Cache directory contains subdirectories for the Library, IP, Line, and IPV of each IPV in the cache. User workspaces are automatically configured with filesystem links to the correct IPVs in the cache.
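The directory layout can be sketched as a path computation. This assumes IPV identifiers of the form `library.ip@version.line` (as in `tutorial.padring@10.TRUNK`, which appears in the example output later in this section) and a hypothetical `/picache` root; the exact directory names IPLM Cache uses may differ, and @HEAD IPVs are not handled.

```python
from pathlib import PurePosixPath


def parse_ipv(identifier: str) -> tuple[str, str, str, str]:
    """Split 'library.ip@version.line' into (library, ip, version, line)."""
    lib_ip, _, ver_line = identifier.partition("@")
    library, _, ip = lib_ip.partition(".")
    version, _, line = ver_line.partition(".")
    if not (library and ip and version and line):
        raise ValueError(f"not a library.ip@version.line identifier: {identifier!r}")
    return library, ip, version, line


def cache_path(cache_root: str, identifier: str) -> PurePosixPath:
    """Hypothetical Library/IP/Line/IPV layout for one cached IPV."""
    library, ip, version, line = parse_ipv(identifier)
    return PurePosixPath(cache_root, library, ip, line, version)


print(cache_path("/picache", "tutorial.padring@10.TRUNK"))
# /picache/tutorial/padring/TRUNK/10
```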

IP modes and project properties

The '--mode' project property can restrict an IPV resource in the workspace to local mode only or to refer mode only. Refer to the Workspace Configuration section for more information on the --mode setting.
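As a rough model of what the restriction means, the following hypothetical check accepts or rejects a requested mode. The function name and the way the property value is represented here (None for unrestricted, otherwise 'local' or 'refer') are assumptions for illustration, not the actual syntax of the --mode property.

```python
from typing import Optional

MODES = ("local", "refer")


def check_mode(requested: str, restriction: Optional[str] = None) -> str:
    """Return the requested mode, or raise if the project property forbids it.

    restriction is a hypothetical stand-in for the --mode project property:
    None allows either mode; "local" or "refer" pins the IPV to that mode.
    """
    if requested not in MODES:
        raise ValueError(f"unknown mode {requested!r}")
    if restriction is not None and requested != restriction:
        raise PermissionError(f"IPV is restricted to {restriction} mode")
    return requested
```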

Enabling IPLM Cache

IPLM Cache is enabled by setting the MDX_PICACHE_SERVER environment variable to point to the site IPLM Cache server, or via settings in the picache.conf file. Refer to the Client Configuration section for more details.
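A wrapper script that needs to know whether IPLM Cache is in use could check the environment along these lines. This is a hypothetical helper: it only looks at MDX_PICACHE_SERVER, does not read picache.conf, and makes no assumption about the format of the server value.

```python
import os
from typing import Mapping, Optional


def picache_server(env: Mapping[str, str] = os.environ) -> Optional[str]:
    """Return the IPLM Cache server named by MDX_PICACHE_SERVER, if set.

    Settings in picache.conf are deliberately not modeled here.
    """
    value = env.get("MDX_PICACHE_SERVER", "").strip()
    return value or None
```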

@HEAD caching mode

@HEAD IPVs can be loaded into IPLM Cache just like fixed release IPVs. However, @HEAD IPVs are inherently dynamic: a cached @HEAD IPV is updated to its latest file versions whenever ANY workspace linked to it runs a workspace update. As a result, the file contents of a refer mode @HEAD IPV can change at any time, which may or may not be desirable depending on the application. Use STATIC_HEAD caching mode to prevent this. The pi ws update, pi ip load, and pi ip publish commands also update @HEAD IPs in the cache.
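The following toy Python model illustrates why shared @HEAD content can shift under a user. The classes are hypothetical and represent the cache directory as an in-memory dict; the point is only that refer mode workspaces share one HEAD directory, so an update triggered from any of them is visible to all.

```python
class SharedHeadCache:
    """Default mode: one shared HEAD directory per IP@HEAD (modeled as a dict)."""

    def __init__(self) -> None:
        self.head: dict[str, str] = {}  # file path -> file content

    def refresh(self, latest: dict[str, str]) -> None:
        # Rewrites the shared directory in place, so every linked workspace sees it.
        self.head.clear()
        self.head.update(latest)


class Workspace:
    def __init__(self, cache: SharedHeadCache) -> None:
        self.cache = cache  # refer mode: the IP directory is a link into the cache

    def update(self, latest: dict[str, str]) -> None:
        # A workspace update (or ip load / ip publish) refreshes the cached copy.
        self.cache.refresh(latest)

    def view(self) -> dict[str, str]:
        return dict(self.cache.head)


cache = SharedHeadCache()
alice, bob = Workspace(cache), Workspace(cache)
alice.update({"top.v": "rev 1"})
bob.update({"top.v": "rev 2"})  # Bob updates his own workspace...
print(alice.view())             # ...and Alice's view changes: {'top.v': 'rev 2'}
```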

Updates of @HEAD IPVs in IPLM Cache are run by the local user account whose workspace initiates the update, rather than by the user account running the IPLM Cache service. This optimization reduces load on the IPLM Cache server. To support it, the user must have DM level read (or greater) access to the IPV contents as well as Perforce IPLM level read (or greater) access.
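The access rule amounts to requiring read (or greater) on both sides. In this sketch the permission level names and their ordering in `LEVELS` are assumptions chosen for illustration, not the actual permission model of Perforce IPLM or of any DM.

```python
LEVELS = ("none", "read", "write", "owner")  # hypothetical, lowest to highest


def at_least_read(level: str) -> bool:
    return LEVELS.index(level) >= LEVELS.index("read")


def can_update_cached_head(dm_level: str, iplm_level: str) -> bool:
    """The updating user needs read (or greater) at BOTH the DM and IPLM level."""
    return at_least_read(dm_level) and at_least_read(iplm_level)
```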

Switching an @HEAD IPV between local and refer mode does not update the cached IPV contents. As a result, the cached @HEAD contents may differ from the local @HEAD contents if either the cache or the local workspace is not fully up to date.

STATIC_HEAD caching mode

By default, IPLM Cache loads @HEAD IPs into the cache in a shared “HEAD” directory. In the common case where multiple users are sharing the single cached version of IP@HEAD in the cache, update commands run by one user can change the content of the shared HEAD directory, which can change the contents of other user workspaces unexpectedly.

Using STATIC_HEAD caching mode

Use STATIC_HEAD mode to store each distinct @HEAD state in its own cache directory, which is not updated afterward. In STATIC_HEAD mode, when an @HEAD IP is loaded, updated, or published into the cache, it is placed in a shared HEAD_<REV> directory, where <REV> is a file-level DM system identifier that uniquely identifies the @HEAD file content at the time the IP@HEAD was loaded into the cache.

If the contents of the IP@HEAD are then changed, and that IP@HEAD is loaded into the cache, a new directory HEAD_<NEW_REV> is created, and the previous HEAD_<REV> is not changed.

Users whose workspaces link to the initial HEAD_<REV> directory do not see any changes to this IP in their workspaces until they run an update, which avoids uncontrolled workspace changes. When they do run an update that affects the IP@HEAD, their link is moved from HEAD_<REV> to HEAD_<LATEST_REV>.
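A toy Python model of STATIC_HEAD behavior: each distinct <REV> gets its own directory that is never rewritten, and a workspace's link moves only when that workspace runs an update. The class names and the revision identifiers `1001` and `1002` are hypothetical, and directories are modeled as in-memory dicts.

```python
from typing import Optional


class StaticHeadCache:
    """STATIC_HEAD mode: one HEAD_<REV> directory per distinct @HEAD state."""

    def __init__(self) -> None:
        self.dirs: dict[str, dict[str, str]] = {}  # dir name -> {path: content}

    def load(self, rev: str, files: dict[str, str]) -> str:
        name = f"HEAD_{rev}"
        # An existing HEAD_<REV> is reused as-is and never modified; changed
        # content arrives with a new <REV>, and therefore in a new directory.
        self.dirs.setdefault(name, dict(files))
        return name


class Workspace:
    def __init__(self, cache: StaticHeadCache) -> None:
        self.cache = cache
        self.link: Optional[str] = None  # which HEAD_<REV> this workspace points at

    def update(self, rev: str, files: dict[str, str]) -> None:
        # Only an update run in this workspace moves this workspace's link.
        self.link = self.cache.load(rev, files)

    def view(self) -> dict[str, str]:
        if self.link is None:
            return {}
        return dict(self.cache.dirs[self.link])


cache = StaticHeadCache()
alice, bob = Workspace(cache), Workspace(cache)
alice.update("1001", {"top.v": "rev 1"})
bob.update("1002", {"top.v": "rev 2"})
print(alice.link, alice.view())  # HEAD_1001 {'top.v': 'rev 1'}
print(bob.link, bob.view())      # HEAD_1002 {'top.v': 'rev 2'}
```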

STATIC_HEAD details

P4 and SVN type IPs currently support STATIC_HEAD operation. Other IP dm_types, including DM Handler based DMs, support only the default HEAD mode in the cache.
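The fallback rule can be written as a small function. The dm_type strings (`p4`, `svn`, compared case-insensitively) and the function name are assumptions; the returned names follow the HEAD and HEAD_<REV> conventions described in this section.

```python
STATIC_HEAD_DM_TYPES = {"p4", "svn"}  # assumed dm_type spellings


def head_dir_name(dm_type: str, rev: str, static_head: bool) -> str:
    """Name of the cache directory that would hold an IP@HEAD."""
    if static_head and dm_type.lower() in STATIC_HEAD_DM_TYPES:
        return f"HEAD_{rev}"
    # Default mode, or a dm_type without STATIC_HEAD support: shared directory.
    return "HEAD"
```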

When an IP@HEAD is switched from the cache into the local workspace with the pi ip local command in STATIC_HEAD mode, the same file versions of the IP@HEAD that were loaded into the cache are populated into the workspace. No update is performed; only the switch from refer to local mode takes place. The IP@HEAD can later be updated to the latest @HEAD file versions.

See STATIC_HEAD caching mode administration for more details on STATIC_HEAD.

Working with IPVs in the cache

IPVs can be loaded into the cache on demand via workspace load or workspace update, or they can be populated directly with the pi ip publish command. IPVs are switched between local and refer mode with the pi ip local and pi ip refer commands, respectively. If an IPV has not yet been populated into IPLM Cache, the first workspace load that includes that IPV must wait for it to be loaded into the cache. To pre-load the IPV before it is needed, use pi ip publish.
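A toy model of this trade-off: an IPV is populated into the cache at most once, so whoever triggers that first populate pays the wait, unless the IPV was published ahead of time. The class and method names are hypothetical, and `lib1.ip1@1.TRUNK` is an illustrative identifier.

```python
class CacheModel:
    """Toy model of on-demand population versus pre-loading with publish."""

    def __init__(self) -> None:
        self.cached: set[str] = set()
        self.populate_count = 0  # each populate stands in for a slow DM populate

    def _populate(self, ipv: str) -> None:
        self.populate_count += 1
        self.cached.add(ipv)

    def publish(self, ipv: str) -> None:
        """Pre-load an IPV, as 'pi ip publish' does, before any workspace needs it."""
        if ipv not in self.cached:
            self._populate(ipv)

    def load_workspace(self, ipvs: list[str]) -> list[str]:
        """Load a workspace; return the IPVs this load had to wait for."""
        waited = []
        for ipv in ipvs:
            if ipv not in self.cached:
                self._populate(ipv)
                waited.append(ipv)
        return waited


cache = CacheModel()
print(cache.load_workspace(["tutorial.padring@10.TRUNK"]))  # first user waits
print(cache.load_workspace(["tutorial.padring@10.TRUNK"]))  # already cached: []
```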

See IPLM Cache administration for information on setting up and administering IPLM Cache.

Switching IPVs to local mode

The 'pi ip local' command is used to move one or more IPVs from refer mode to local mode in a workspace.

pi ip local command

```
> pi ip local -h
Usage: pi ip local [-h] [--all] [ip [ip ...]]

Description:
  Create a local copy of an IP.

  An IP in a workspace can be in "refer" or "local" mode. In refer mode the IP is a link to the PI replication area. In local mode there is a local, editable copy of the IP.

Positional arguments:
  ip          The IP to switch to local mode.

Optional arguments:
  --all, -a   Switch all IP to local mode.
  -h, --help  Show this help message and exit

Examples:
  # Convert all IP in the workspace to local
  pi ip local --all

  # Convert the single IP lib1.ip1 to local
  pi ip local lib1.ip1
```

| Command option | Description |
|---|---|
| --all, -a | Switch all IPVs in the workspace to local mode |

Example output:

```
> pi ip local padring
Switching 'tutorial.padring@10.TRUNK' to local mode.
```

Switching IPVs to refer mode

The 'pi ip refer' command moves one or more IPVs from local mode to refer mode in a workspace. Perforce IPLM blocks the switch to refer mode if there are unmanaged files in the IPV directory (unless the --force option is provided), or if any IPV to be switched contains locally modified managed files (for example, files checked out and modified but not checked in).
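The blocking rules can be sketched as a function that returns the reasons a switch to refer mode would be refused. It follows the --force help text (which discards unmanaged files) and assumes a simple per-file record of whether a file is DM-managed and locally modified; `FileState` and `refer_blockers` are hypothetical names, not part of PiClient.

```python
from dataclasses import dataclass


@dataclass
class FileState:
    path: str
    managed: bool           # tracked by the IP's DM
    modified: bool = False  # differs from the checked-in version


def refer_blockers(files: list[FileState], force: bool = False) -> list[str]:
    """Reasons the switch to refer mode would be refused (empty list = allowed)."""
    reasons = []
    for f in files:
        if f.managed and f.modified:
            # --force does not help here: check in or revert the file first.
            reasons.append(f"{f.path}: locally modified managed file")
        elif not f.managed and not force:
            reasons.append(f"{f.path}: unmanaged file (use --force to discard)")
    return reasons
```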

Unload mode applies only to Perforce (P4) type IPs and is set with the --unload option. It is a number from 0 to 2: lower mode numbers give higher performance, while higher mode numbers check for and preserve file modifications more carefully when an IP is unloaded from the workspace.

| Unload mode | Default | Description |
|---|---|---|
| 0 | Y | Block IP unload for p4 open files. This is a server-only check and the quickest unload option. |
| 1 | N | Block IP unload for open and modified files. Requires on-disk checks, so it is slower; open but unmodified files are reverted automatically. |
| 2 | N | Same as 1, but unopened modified files (edited without p4 edit) are backed up to the BACKUP_DIR directory configured in piclient.conf. |
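The unload mode table above can be read as a decision function for P4 IPs. The `P4File` record and `plan_unload` function are hypothetical; the sketch only classifies files, and where the table is silent (for example, unopened modified files in modes 0 and 1) it takes no action rather than guessing.

```python
from dataclasses import dataclass


@dataclass
class P4File:
    path: str
    opened: bool    # opened in Perforce (e.g. with p4 edit)
    modified: bool  # on-disk content differs from the synced revision


def plan_unload(files: list[P4File], mode: int = 0) -> dict[str, list[str]]:
    """Classify files as block, revert, or backup according to the unload mode."""
    if mode not in (0, 1, 2):
        raise ValueError("unload mode must be 0, 1 or 2")
    plan: dict[str, list[str]] = {"block": [], "revert": [], "backup": []}
    for f in files:
        if mode == 0:
            # Server-only check: any open file blocks; the disk is not inspected.
            if f.opened:
                plan["block"].append(f.path)
        elif f.opened and f.modified:
            plan["block"].append(f.path)
        elif f.opened:
            plan["revert"].append(f.path)  # open but unmodified: auto-reverted
        elif f.modified and mode == 2:
            plan["backup"].append(f.path)  # edited without p4 edit: to BACKUP_DIR
    return plan
```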

pi ip refer command

```
> pi ip refer -h
Usage: pi ip refer [-h] [--force] [--all] [ip [ip ...]]

Description:
  Switch an IP to refer mode.

  An IP in a workspace can be in "refer" or "local" mode. In refer mode the IP is a link to the PI replication area. In local mode there is a local, editable copy of the IP.

Positional arguments:
  ip             The IP to switch to refer mode.

Optional arguments:
  --all, -a      Switch all IP to refer mode.
  --force, -f    Discard any unmanaged files in the local IP.
  --unload MODE  Specify handling of IPVs that will be unloaded from the workspace.
                 MODE is integer 0-2:
                 0 (default) - block unload for open files,
                 1 - block unload for open+modified files (unmodified files will be reverted),
                 2 - same as 1 but unopened modified files will be backed up to BACKUP_DIR.
                 Higher mode number gives lower performance.
  -h, --help     Show this help message and exit

Examples:
  # Convert all IP in the workspace to refer mode
  pi ip refer --all

  # Convert the single IP lib1.ip1 to refer mode
  pi ip refer lib1.ip1
```

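| Command option | Description |
|---|---|
| --all, -a | Switch all IPVs in the workspace to refer mode |
| --force, -f | Force the switch to refer mode even if there are local unmanaged files in the IPV; those files are discarded |
| --unload MODE | Specify handling of IPVs unloaded from the workspace; MODE is 0-2 (see the unload mode table above) |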

Example output:

```
> pi ip refer padring
Switching 'tutorial.padring@10.TRUNK' to refer mode.
Successfully switched 'tutorial.padring@10.TRUNK' to refer mode.
```

Open and modified files

Open and modified files block the switch to refer mode. These files must be checked in or reverted prior to moving the IPV to refer mode.

For P4 IPs, open but unmodified files are reverted automatically when unload mode 1 or 2 is used. With unload mode 2, files that were modified without being opened are copied to a backup directory (BACKUP_DIR in piclient.conf).

Publishing IPVs to IPLM Cache

The 'pi ip publish' command publishes IPVs directly to IPLM Cache without first loading them into a workspace via 'pi ip load'. This is a convenient way to pre-load IPVs into IPLM Cache, for example at creation time via a post-release hook, so that the first user to load a workspace that includes the IPV doesn't have to wait for it to be loaded into the cache. For @HEAD IPs already in the cache, running 'pi ip publish' against the IP updates the cached copy to the latest @HEAD DM files.
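A post-release hook could pre-load a new release along these lines. This sketch only builds and runs the documented `pi ip publish [--hier] ipv_identifier` command line; how a hook receives the released IPV identifier (assumed here to be the first command-line argument) depends on how hooks are configured at your site, and `publish_argv` and `main` are hypothetical names.

```python
import shlex
import subprocess
import sys


def publish_argv(ipv: str, hierarchy: bool = False) -> list[str]:
    """Build the 'pi ip publish' command line for one IPV."""
    argv = ["pi", "ip", "publish"]
    if hierarchy:
        argv.append("--hier")  # also publish every IPV in its hierarchy
    argv.append(ipv)
    return argv


def main(argv: list[str]) -> int:
    # Hypothetical hook entry point: the released IPV is assumed to be argv[1].
    if len(argv) < 2:
        print("usage: publish_hook.py IPV", file=sys.stderr)
        return 2
    cmd = publish_argv(argv[1], hierarchy=True)
    print("running:", shlex.join(cmd))
    return subprocess.run(cmd).returncode
```

A hook script would call `sys.exit(main(sys.argv))`. Passing `--hier` pre-loads the released IPV's whole hierarchy; drop it to publish only the released IPV itself.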

pi ip publish command

```
> pi ip publish -h
Usage: pi ip publish [-h] [--hier] ipv_identifier

Description: Publish the given IPV to a IPLM Cache.

Positional arguments:
  ipv_identifier       The IPV to publish.

Optional arguments:
  --hier, --hierarchy  Publish all the IPV(s) in the ipv_identifier hierarchy.
  -h, --help           Show this help message and exit
```

| Command option | Description |
|---|---|
| --hier, --hierarchy | Publish the ipv_identifier IPV and every IPV in its hierarchy to IPLM Cache |

Refreshing cached IPVs from the workspace

If IPVs in IPLM Cache have been removed via the 'pi-admin picache remove' command or have been automatically cleaned up, it is possible to use the 'pi ws refresh' command to restore them on a workspace by workspace basis. See the Refreshing Workspaces section for details.