KOLMOGOROV2 Function (PV-WAVE Advantage)

Performs a Kolmogorov-Smirnov two-sample test.

Usage

result = KOLMOGOROV2(x, y)

Input Parameters

x—One-dimensional array containing the observations from sample one.

y—One-dimensional array containing the observations from sample two.

Returned Value

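result—One-dimensional array of length 3 containing Z, p1, and p2: the test statistic Z in result(0), the one-sided p-value in result(1), and the two-sided p-value in result(2).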

Input Keywords

Double—If present and nonzero, double precision is used.

Output Keywords

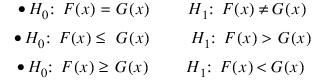

Differences—Named variable into which a one-dimensional array containing $D_n$, $D_n^+$, and $D_n^-$ is stored.

Nmissingx—Named variable into which the number of missing values in the x sample is stored.

Nmissingy—Named variable into which the number of missing values in the y sample is stored.

Discussion

Function KOLMOGOROV2 computes Kolmogorov-Smirnov two-sample test statistics for testing that two continuous cumulative distribution functions (CDFs) are identical, based upon two random samples. One- or two-sided alternatives are allowed. If n_observations_x = N_ELEMENTS(x) and n_observations_y = N_ELEMENTS(y), then exact p-values are computed for the two-sided test when n_observations_x * n_observations_y is less than $10^4$.

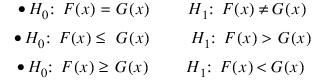

Let Fn(x) denote the empirical CDF in the X sample, let Gm(y) denote the empirical CDF in the Y sample, where n = n_observations_x − Nmissingx and m = n_observations_y − Nmissingy, and let the corresponding population distribution functions be denoted by F(x) and G(y), respectively. Then, the hypotheses tested by KOLMOGOROV2 are as follows:

The test statistics are given as follows:

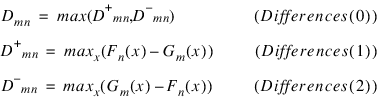
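The equations in this section survive only as images. As a reference point, the conventional two-sample Kolmogorov-Smirnov formulation is sketched below in the notation of this section. It is assumed, not confirmed, to match the figures. In particular, which one-sided difference is labeled $D_n^+$ and which $D_n^-$ is an assumption.

```latex
% Hypotheses (two-sided, then the two one-sided alternatives)
\begin{array}{ll}
H_0: F(x) = G(x)   & H_1: F(x) \ne G(x) \\
H_0: F(x) \ge G(x) & H_1: F(x) < G(x) \\
H_0: F(x) \le G(x) & H_1: F(x) > G(x)
\end{array}

% Test statistics, built from the empirical CDFs F_n and G_m
\begin{aligned}
D_n   &= \max\left(D_n^+,\, D_n^-\right) \\
D_n^+ &= \max_x \left(F_n(x) - G_m(x)\right) \\
D_n^- &= \max_x \left(G_m(x) - F_n(x)\right)
\end{aligned}
```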

Asymptotically, the distribution of the statistic

$$Z = D_n \sqrt{\frac{mn}{m+n}}$$

(returned in result(0)) converges to a distribution given by Smirnov (1939).

Exact probabilities for the two-sided test are computed when m * n is less than or equal to $10^4$, according to an algorithm given by Kim and Jennrich (1973). When m * n is greater than $10^4$, the very good approximations given by Kim and Jennrich are used to obtain the two-sided p-values. The one-sided probability is taken as one half the two-sided probability. This is a very good approximation when the p-value is small (say, less than 0.10) but not when the p-value is large.

Example

The following example illustrates the KOLMOGOROV2 routine with two randomly generated samples from a uniform(0,1) distribution. Since the two theoretical distributions are identical, we would not expect to reject the null hypothesis.

RANDOMOPT, set = 123457

x = RANDOM(100, /Uniform)

y = RANDOM(60, /Uniform)

stats = KOLMOGOROV2(x, y, Differences = d, Nmissingx = nmx, Nmissingy = nmy)

PRINT, 'D =', d(0)

; PV-WAVE prints: D = 0.180000

PRINT, 'D+ =', d(1)

; PV-WAVE prints: D+ = 0.180000

PRINT, 'D- =', d(2)

; PV-WAVE prints: D- = 0.0100001

PRINT, 'Z =', stats(0)

; PV-WAVE prints: Z = 1.10227

PRINT, 'Prob greater D one sided =', stats(1)

; PV-WAVE prints: Prob greater D one sided = 0.0720105

PRINT, 'Prob greater D two sided =', stats(2)

; PV-WAVE prints: Prob greater D two sided = 0.144021

PRINT, 'Missing X =', nmx

; PV-WAVE prints: Missing X = 0

PRINT, 'Missing Y =', nmy

; PV-WAVE prints: Missing Y = 0
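The statistics printed above can be cross-checked outside PV-WAVE. The following Python sketch is an independent illustration, not the KOLMOGOROV2 implementation. The function names `ks_two_sample_stats` and `ks_z` are hypothetical, the sign convention for D+ and D- is an assumption, missing values are not handled, and no p-values are computed.

```python
import math


def ks_z(d, n, m):
    """Scale the two-sided difference D to Z = D * sqrt(m*n / (m + n))."""
    return d * math.sqrt(n * m / (n + m))


def ks_two_sample_stats(x, y):
    """Return (D, D_plus, D_minus, Z) for two samples without missing values.

    Assumed convention: D_plus = max(F_n - G_m), D_minus = max(G_m - F_n),
    where F_n and G_m are the empirical CDFs of x and y.
    """
    if len(x) == 0 or len(y) == 0:
        raise ValueError("both samples must be non-empty")
    xs, ys = sorted(x), sorted(y)
    n, m = len(xs), len(ys)
    i = j = 0
    d_plus = d_minus = 0.0
    # The empirical CDFs change only at observed values, so it is enough
    # to compare them after each distinct value of the pooled sample.
    while i < n or j < m:
        if j >= m or (i < n and xs[i] <= ys[j]):
            v = xs[i]
        else:
            v = ys[j]
        # Consume every observation equal to v (handles ties).
        while i < n and xs[i] == v:
            i += 1
        while j < m and ys[j] == v:
            j += 1
        diff = i / n - j / m  # F_n(v) - G_m(v)
        d_plus = max(d_plus, diff)
        d_minus = max(d_minus, -diff)
    d = max(d_plus, d_minus)
    return d, d_plus, d_minus, ks_z(d, n, m)
```

With D = 0.18, n = 100, and m = 60 from the example, `ks_z(0.18, 100, 60)` gives approximately 1.10227, which agrees with the printed Z. The printed one-sided probability (0.0720105) is likewise one half of the two-sided probability (0.144021), as described in the Discussion.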

Version 2017.0

Copyright © 2017, Rogue Wave Software, Inc. All Rights Reserved.