Introduction

This section introduces some of the mathematical concepts used with PV‑WAVE.

Unconstrained Minimization

The unconstrained minimization problem can be stated as follows:

$$\min_{x \in \mathbb{R}^n} f(x)$$

where $f : \mathbb{R}^n \to \mathbb{R}$ is continuous and has derivatives of all orders required by the algorithms. The functions for unconstrained minimization are grouped into three categories: univariate functions, multivariate functions, and nonlinear least-squares functions.

For the univariate functions, it is assumed that the function is unimodal within the specified interval. For a discussion of unimodality, see Brent (1973).
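To see why the unimodality assumption matters, consider a minimal golden-section search sketch in Python. This is an illustration only and is not the algorithm used by the PV-WAVE univariate functions; the name `golden_section_min` and its parameters are hypothetical. The method discards part of the interval at every step, which is only safe when the function has a single minimum inside the interval.

```python
import math


def golden_section_min(f, a, b, tol=1.0e-6, max_iter=200):
    """Approximate the minimizer of a unimodal function f on [a, b].

    Hypothetical helper for illustration; not a PV-WAVE routine.
    """
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0  # about 0.618
    c = b - inv_phi * (b - a)
    d = a + inv_phi * (b - a)
    fc, fd = f(c), f(d)
    for _ in range(max_iter):
        if b - a <= tol:
            break
        if fc < fd:
            # By unimodality, the minimum cannot lie in (d, b]; drop it.
            b, d, fd = d, c, fc
            c = b - inv_phi * (b - a)
            fc = f(c)
        else:
            # By unimodality, the minimum cannot lie in [a, c); drop it.
            a, c, fc = c, d, fd
            d = a + inv_phi * (b - a)
            fd = f(d)
    return 0.5 * (a + b)


# A parabola is unimodal on [0, 5], with its minimum at x = 2.
x_min = golden_section_min(lambda x: (x - 2.0) ** 2 + 1.0, 0.0, 5.0)
```

If the function had two minima inside the interval, the discarded subinterval could contain the better one, and the search would still converge without any warning.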

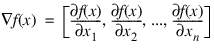

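A quasi-Newton method is used for the multivariate function FMINV. The default is to use a finite-difference approximation of the gradient of f(x). Here, the gradient is defined to be the following vector:

$$\nabla f(x) = \left[ \frac{\partial f(x)}{\partial x_1}, \frac{\partial f(x)}{\partial x_2}, \ldots, \frac{\partial f(x)}{\partial x_n} \right]$$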

However, when the exact gradient can be easily provided, the Grad keyword should be used.
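The trade-off between a finite-difference gradient and an exact one can be sketched in Python as follows. The forward-difference scheme, the step-size rule, and the Rosenbrock test function are assumptions chosen for illustration; they are not taken from the FMINV implementation, and the code does not show how the Grad keyword is called.

```python
def forward_difference_gradient(f, x, rel_step=1.0e-8):
    """Approximate the gradient of f at x with forward differences.

    Costs one extra function evaluation per component of x.
    """
    fx = f(x)
    grad = []
    for i in range(len(x)):
        h = rel_step * max(abs(x[i]), 1.0)  # step scaled to the size of x[i]
        shifted = list(x)
        shifted[i] += h
        grad.append((f(shifted) - fx) / h)
    return grad


def rosenbrock(x):
    return 100.0 * (x[1] - x[0] ** 2) ** 2 + (1.0 - x[0]) ** 2


def rosenbrock_gradient(x):
    """Exact gradient of the Rosenbrock function, derived by hand."""
    return [
        -400.0 * x[0] * (x[1] - x[0] ** 2) - 2.0 * (1.0 - x[0]),
        200.0 * (x[1] - x[0] ** 2),
    ]


point = [-1.2, 1.0]
approx = forward_difference_gradient(rosenbrock, point)
exact = rosenbrock_gradient(point)
max_error = max(abs(a - e) for a, e in zip(approx, exact))
```

The approximation needs n additional function evaluations per gradient and carries both truncation and rounding error, which is why an exact gradient is preferable whenever it is cheap to code.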

The nonlinear least-squares function uses a modified Levenberg-Marquardt algorithm. The most common application of the function is the nonlinear data-fitting problem where the user is trying to fit the data with a nonlinear model.
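As a sketch of the data-fitting setting described above, the problem can be written in the following form. The symbols $t_i$, $y_i$, $\phi$, $r_i$, $J$, $\lambda$, and $\delta$ are notation assumed here for illustration and do not come from the original text. The second line is the textbook Levenberg-Marquardt step; the modified variant used by the library may differ in detail.

$$\min_{x \in \mathbb{R}^n} \frac{1}{2} \sum_{i=1}^{m} r_i(x)^2, \qquad r_i(x) = \phi(t_i; x) - y_i$$

$$\left( J^T J + \lambda I \right) \delta = -J^T r(x)$$

Here $\phi(t; x)$ is the nonlinear model with parameters $x$, $(t_i, y_i)$ are the data points, $J$ is the Jacobian of the residual vector $r(x)$, and $\lambda \ge 0$ is a damping parameter that blends a Gauss-Newton step ($\lambda = 0$) with a short steepest-descent step (large $\lambda$).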

These functions are designed to find only a local minimum point. However, a function may have many local minima. Try different initial points and intervals to obtain a better local solution.
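The advice to try different initial points can be sketched as a simple multistart loop. The following Python example is an illustration under stated assumptions: the test function, the fixed-step gradient descent used as the local solver, and all names are hypothetical stand-ins, not PV-WAVE routines.

```python
def objective(x):
    # A univariate function with two local minima, near x = -1.30 and x = 1.13.
    return x ** 4 - 3.0 * x ** 2 + x


def derivative(x):
    return 4.0 * x ** 3 - 6.0 * x + 1.0


def local_descent(x0, step=0.01, iterations=2000):
    """Fixed-step gradient descent; converges to the nearest local minimum."""
    x = x0
    for _ in range(iterations):
        x -= step * derivative(x)
    return x


# Run the local solver from several starting points and keep the best result.
starts = [-2.0, -1.0, 0.0, 1.0, 2.0]
candidates = [local_descent(x0) for x0 in starts]
best_x = min(candidates, key=objective)

# A single start at x = 2 settles in the shallower minimum instead.
single_start_x = local_descent(2.0)
```

Multistart does not guarantee a global minimum, but comparing the objective values of the candidates gives some evidence about whether a better local solution exists.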

Double-precision arithmetic is recommended for the functions when the user provides only the function values.

Linearly Constrained Minimization

The linearly constrained minimization problem can be stated as follows:

$$\min_{x \in \mathbb{R}^n} f(x)$$

subject to:

$$A_1 x = b_1$$

where $f : \mathbb{R}^n \to \mathbb{R}$, $A_1$ is a coefficient matrix, and $b_1$ is a vector. If f(x) is linear, then the problem is a linear programming problem; if f(x) is quadratic, the problem is a quadratic programming problem.
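For illustration, the two special cases of the objective can be written as follows. The cost vector $c$ and the symmetric matrix $H$ are symbols assumed here; they do not appear in the original text.

$$f(x) = c^T x \quad \text{(linear programming)}$$

$$f(x) = \tfrac{1}{2} x^T H x + c^T x \quad \text{(quadratic programming)}$$

In the quadratic case, $H$ is the Hessian of $f$, and the problem is convex when $H$ is positive semidefinite.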

The LINEAR_PROGRAMMING Function (PV-WAVE Advantage) uses an active set strategy to solve linear programming problems, and is intended as a replacement for the LINPROG Function (PV-WAVE Advantage). The two functions have similar interfaces, which should help facilitate migration from the LINPROG Function to the LINEAR_PROGRAMMING Function. In general, the LINEAR_PROGRAMMING Function should be expected to perform more efficiently than the LINPROG Function. Both the LINEAR_PROGRAMMING Function and the LINPROG Function are intended for use with small- to medium-sized linear programming problems. No sparsity is assumed since the coefficients are stored in full matrix form.

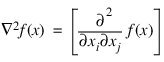

The QUADPROG function is designed to solve convex quadratic programming problems using a dual quadratic programming algorithm. If the given Hessian is not positive definite, then QUADPROG modifies it to be positive definite. In this case, output should be interpreted with care because the problem has been changed slightly. Here, the Hessian of f(x) is defined to be the n × n matrix as follows:

$$\nabla^2 f(x) = \left[ \frac{\partial^2 f(x)}{\partial x_i \, \partial x_j} \right], \qquad i, j = 1, 2, \ldots, n$$
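Because QUADPROG changes the problem when the Hessian is not positive definite, it can be useful to test the matrix before calling the solver. The Python sketch below does this by attempting a Cholesky factorization, which succeeds exactly when a symmetric matrix is positive definite. The function name is hypothetical, and the code does not reproduce how QUADPROG itself detects or modifies an indefinite Hessian.

```python
import math


def is_positive_definite(h):
    """Return True if the symmetric matrix h has a Cholesky factorization.

    h is a list of rows; only the lower triangle is read.
    """
    n = len(h)
    lower = [[0.0] * n for _ in range(n)]
    for j in range(n):
        pivot = h[j][j] - sum(lower[j][k] ** 2 for k in range(j))
        if pivot <= 0.0:
            return False  # a non-positive pivot means h is not positive definite
        lower[j][j] = math.sqrt(pivot)
        for i in range(j + 1, n):
            s = sum(lower[i][k] * lower[j][k] for k in range(j))
            lower[i][j] = (h[i][j] - s) / lower[j][j]
    return True


convex_hessian = [[2.0, 0.0], [0.0, 4.0]]       # eigenvalues 2 and 4
indefinite_hessian = [[1.0, 2.0], [2.0, 1.0]]   # eigenvalues 3 and -1

convex_ok = is_positive_definite(convex_hessian)
indefinite_ok = is_positive_definite(indefinite_hessian)
```

A failed check is a signal to revisit the model or to treat the solver output with the caution described above.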

Nonlinearly Constrained Minimization

The nonlinearly constrained minimization problem can be stated as follows:

$$\min_{x \in \mathbb{R}^n} f(x)$$

subject to:

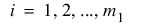

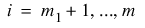

where $f : \mathbb{R}^n \to \mathbb{R}$ and $g_i : \mathbb{R}^n \to \mathbb{R}$ for $i = 1, 2, \ldots, m$.

The routine CONSTRAINED_NLP uses a sequential equality constrained quadratic programming method. A more complete discussion of this algorithm can be found in the CONSTRAINED_NLP Function (PV-WAVE Advantage) description.

Return Values from User-Supplied Functions

All values returned by user-supplied functions must be valid real numbers. It is the user’s responsibility to check that the values returned by a user-supplied function do not contain NaN, infinity, or negative infinity values.
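One way to meet this responsibility is to wrap the user-supplied function in a guard that rejects non-finite values before they reach the optimizer. The sketch below shows the idea in Python; the decorator name `checked` and the example objective are hypothetical, and an equivalent check would need to be written in the language of the actual user-supplied function.

```python
import math


def checked(user_function):
    """Wrap a user-supplied function so that NaN or infinite results raise an error."""
    def wrapper(x):
        value = user_function(x)
        # Accept either a scalar or a list/tuple of values.
        values = value if isinstance(value, (list, tuple)) else [value]
        for v in values:
            if not math.isfinite(v):
                raise ValueError(
                    "user-supplied function returned %r at x = %r" % (v, x)
                )
        return value
    return wrapper


@checked
def objective(x):
    # Returns infinity outside the domain x[0] > 0, which the guard rejects.
    if x[0] <= 0.0:
        return float("inf")
    return x[0] - math.log(x[0])


value_at_one = objective([1.0])  # finite, so it passes through unchanged
```

Failing early with a clear message is usually easier to diagnose than an optimizer that silently stalls or returns a meaningless result.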

Version 2017.0

Copyright © 2017, Rogue Wave Software, Inc. All Rights Reserved.