Using multiple P4 Servers with IPLM

Perforce server configuration in Perforce IPLM

Perforce IPLM flexibly integrates with multiple P4 Servers. In many cases it is sufficient to specify a single P4 Server using the $P4PORT variable:

```
> env | grep P4PORT
P4PORT=aus-p4commit:1666
```

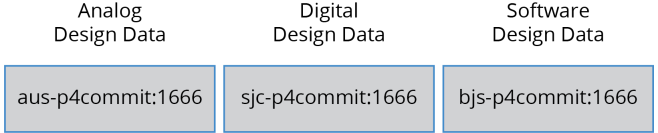

However, some environments need multiple independent P4 commit servers. Geographical data distribution, security auditing, third-party design collaboration, and, in extreme cases, data volume management can all be addressed with P4 data federation and multiple P4 commit servers.

Perforce IPLM can manage data from any number of independent P4 Servers in a single IP Hierarchy and populate the data into a single workspace.

Tip: When multiple P4 commit servers are used, the best practice is to always set the IP's host field to the address of the IP's target commit server.

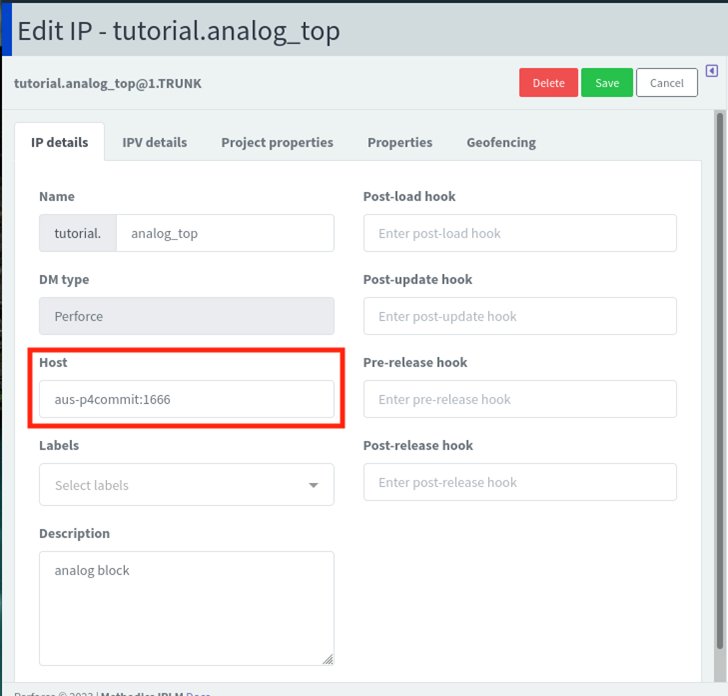

If an IP should use a commit server other than the one specified in the $P4PORT variable, set that server's P4 address in the IP's host field.

Setting the host field using the CLI (via pi ip add or pi ip edit):

```
[IP]
# IP Permissions apply
# Name is required
name = analog_top
# Read-only
library = tutorial
# Read-only
dm_type = P4
# Host is used for p4 IP only
host = aus-p4commit:1666
# Description is optional
description = analog block
```

Alternatively, set the host field in IPLM Web using the Edit IP function.

Tip: The host field on dm_type P4 IPs is supported in Perforce IPLM version 2.32 and later. For the P4 streams DMHandler, the host field is currently invalid; the equivalent setting is managed in a special IP property. Refer to the P4 streams documentation for details.
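Because the [IP] form used with pi ip add and pi ip edit is laid out like an INI file, a script can read its host field with Python's standard configparser module. The sketch below assumes the form text is INI-compatible; read_ip_host is a hypothetical helper, not part of the pi CLI.

```python
import configparser
from typing import Optional

# The [IP] form from above, with some comments trimmed (assumed INI-compatible).
IP_FORM = """
[IP]
# Name is required
name = analog_top
library = tutorial
dm_type = P4
# Host is used for p4 IP only
host = aus-p4commit:1666
description = analog block
"""


def read_ip_host(form_text: str) -> Optional[str]:
    """Return the host field of an [IP] form, or None if it is missing or empty."""
    # interpolation=None so any '%' in a value is read literally.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(form_text)
    host = parser.get("IP", "host", fallback="").strip()
    return host or None


if __name__ == "__main__":
    print(read_ip_host(IP_FORM))  # aus-p4commit:1666
```

An IP for which this returns None has no host set and is built against the server in $P4PORT.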

Building a Workspace from multiple Perforce P4 Servers

When an IPLM workspace contains IPs that have a host value, the host field value is used instead of the default client environment variable, $P4PORT. The host value is applied through a .p4config file written into the IP directory. The IP Hierarchy workspace is built correct-by-construction using pi ip load.

If the client environment variable $P4PORT is set, its value is always used for the shared client, even if the top-level IP has a host value defined.

If the top-level IP also has a host value defined, it is included in the shared client when that host value matches $P4PORT; when the host value differs from $P4PORT, a separate client is created for it.

IPs whose host matches the primary server, or whose host is not set, are built using the main Helix client, which is associated with the primary server.
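The client-assignment rules above can be sketched as a small partitioning step. This is an illustration only, assuming each IP is described by its name and an optional host value and that the primary server is the value of $P4PORT; partition_ips is a hypothetical name and does not reflect pi internals.

```python
from typing import Dict, List, Optional, Tuple


def partition_ips(
    primary_port: str, ip_hosts: Dict[str, Optional[str]]
) -> Tuple[List[str], Dict[str, str]]:
    """Split IPs between the shared (main) client and dedicated clients.

    primary_port: the primary server, normally the value of $P4PORT.
    ip_hosts: IP name -> host field value, or None when the host is not set.
    Returns (shared, dedicated): IP names for the main client, and
    IP name -> host for IPs that need their own client and .p4config.
    """
    shared: List[str] = []
    dedicated: Dict[str, str] = {}
    for ip_name, host in ip_hosts.items():
        if not host or host == primary_port:
            shared.append(ip_name)  # host unset or same as the primary server
        else:
            dedicated[ip_name] = host  # any other server gets a dedicated client
    return shared, dedicated
```

For example, with $P4PORT set to aus-p4commit:1666, an IP hosted on sjc-p4commit:1666 lands in the dedicated group, while IPs on aus-p4commit:1666 or with no host share the main client.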

Any IP with a host value set that does not match the primary server is built with a dedicated Helix client and a P4CONFIG file is placed in the IP directory:

```
> cd <wsdir>/blocks/t1
> ls -al
total 28
drwxr-x--- 6 root root 4096 Aug 7 10:50 .
drwxrwxr-x 33 root root 4096 Aug 7 10:50 ..
drwxrwxr-x 4 root root 4096 Aug 7 10:50 doc
drwxrwxr-x 6 root root 4096 Aug 7 10:50 hw_code
-rw-rw-r-- 1 root root 69 Aug 7 10:50 .p4config
drwxrwxr-x 4 root root 4096 Aug 7 10:50 sw_code
> cat .p4config
P4PORT=sjc-p4commit:1666
P4CLIENT=ws:rod:tutorial.digital_top:ad5a4926
```

This .p4config points to sjc-p4commit, the server set in the IP's host field, so any p4 command run under the IP directory uses the correct server and client.
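The dedicated-client .p4config shown above is a plain KEY=value file. pi writes it itself; the hypothetical helpers below only sketch how such a file could be produced, assuming the two-line P4PORT/P4CLIENT layout from the listing.

```python
import os


def format_p4config(port: str, client: str) -> str:
    """Return the text of a P4CONFIG file naming a server and a client."""
    return f"P4PORT={port}\nP4CLIENT={client}\n"


def write_p4config(ip_dir: str, port: str, client: str, name: str = ".p4config") -> str:
    """Write the P4CONFIG file into ip_dir and return the file's path."""
    path = os.path.join(ip_dir, name)
    with open(path, "w", encoding="utf-8") as fh:
        fh.write(format_p4config(port, client))
    return path
```

Calling write_p4config("<wsdir>/blocks/t1", "sjc-p4commit:1666", "ws:rod:tutorial.digital_top:ad5a4926") would reproduce the two lines from the listing.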

Reviewing the P4CONFIG file at the top-level of the Perforce IPLM workspace, we see:

```
> cat <wsroot>/.p4config
P4PORT=aus-p4commit:1666
P4CLIENT=ws:rod:tutorial.tutorial:ad5a4926
```

The main workspace .p4config points to the main Perforce server, aus-p4commit:1666, so p4 commands run in any workspace directory that has no .p4config of its own use that configuration. More information on the Perforce P4CONFIG system can be found by searching for 'P4CONFIG' on the Perforce documentation site.
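When the P4CONFIG environment variable is set to a file name (here assumed to be .p4config), the p4 command searches the current directory and then each parent directory for that file and uses the first one it finds. That is why commands under blocks/t1 pick up the IP's own file while commands elsewhere fall through to the workspace root file. A minimal sketch of that lookup, with find_p4config as a hypothetical name:

```python
import os
from typing import Optional


def find_p4config(start_dir: str, name: str = ".p4config") -> Optional[str]:
    """Return the nearest file called `name` in start_dir or any parent, else None."""
    current = os.path.abspath(start_dir)
    while True:
        candidate = os.path.join(current, name)
        if os.path.isfile(candidate):
            return candidate  # the closest config file wins
        parent = os.path.dirname(current)
        if parent == current:  # reached the filesystem root without a match
            return None
        current = parent
```

Run from <wsdir>/blocks/t1/hw_code, this returns <wsdir>/blocks/t1/.p4config; run from any directory outside an IP with a dedicated client, it returns <wsroot>/.p4config.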

All other Perforce IPLM commands function normally, and the use of multiple servers is transparent to the user.

Using multiple Perforce P4 Servers in a replication (commit-edge/proxy) setup

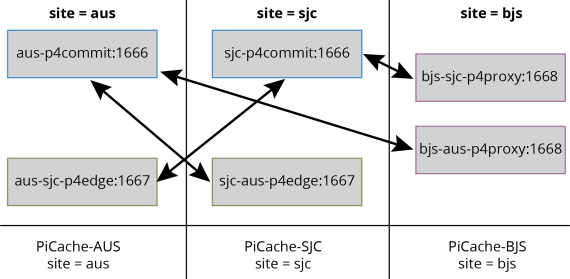

In a Perforce Helix Core replication environment, where one or more edge/proxy servers are attached to each commit server, the fixed commit server address stored in the IPLM IP's host field must be mapped to the P4 edge/proxy server used at each site.

For instance, at a particular site the local proxy may be configured to communicate with the commit server but allow only a filtered set of data to be replicated, with no direct access to the commit server for users at that site. Because local users cannot reach the commit server directly, the site needs a mapping from the commit server to its edge/proxy server, so that an IP whose host is a server with no network route from the site can still be loaded into a workspace. In this case, all P4 client commands must be directed to the edge/proxy server.

The IPLM client needs to know the local site name. It normally obtains it from the IPLM Cache (PiCache) configured at each site/LAN with the correct site setting. Alternatively, without PiCache, set the $MDX_SITE environment variable on the client. The site string can be any alphanumeric value. If a project-specific PiCache is configured, a site can be synonymous with a project.
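The site lookup described above can be sketched as follows. The precedence (PiCache site first, then $MDX_SITE) is an assumption drawn from the description of $MDX_SITE as the alternative to PiCache, resolve_site is a hypothetical name, and how the pi client actually queries PiCache is not modeled here; the PiCache value is simply passed in.

```python
import os
import re
from typing import Mapping, Optional

# A site name can be any alphanumeric value.
_SITE_PATTERN = re.compile(r"[A-Za-z0-9]+")


def resolve_site(
    picache_site: Optional[str], env: Mapping[str, str] = os.environ
) -> Optional[str]:
    """Return the local site name, or None if neither source provides one.

    picache_site: the site reported by the local PiCache, or None without PiCache.
    env: environment to read MDX_SITE from (defaults to the process environment).
    """
    # Assumed precedence: PiCache site setting first, then $MDX_SITE.
    # Empty strings are treated the same as "not set".
    site = picache_site or env.get("MDX_SITE") or None
    if site is None:
        return None
    if not _SITE_PATTERN.fullmatch(site):
        raise ValueError(f"site must be alphanumeric, got {site!r}")
    return site
```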

In this example, the server names conform to the following syntax:

- commit: location-function:port
- replica: from-to-function:port
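To make this naming convention concrete, the hypothetical helper below splits a server address into its parts. It applies only to names that follow this example's convention.

```python
from typing import Dict


def parse_server_name(address: str) -> Dict[str, str]:
    """Split a host:port that follows this example's naming convention.

    commit:  location-function:port   (e.g. aus-p4commit:1666)
    replica: from-to-function:port    (e.g. sjc-aus-p4edge:1667)
    """
    name, port = address.rsplit(":", 1)
    parts = name.split("-")
    if len(parts) == 2:
        location, function = parts
        return {"role": "commit", "location": location, "function": function, "port": port}
    if len(parts) == 3:
        src, dst, function = parts
        return {"role": "replica", "from": src, "to": dst, "function": function, "port": port}
    raise ValueError(f"not in location-function or from-to-function form: {address!r}")
```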

Requirements:

- One or more P4 commit servers
- Helix Core edge or proxy servers at one or more remote sites/LANs
- An IPLM IP whose host field is set to a commit server host:port value
- An IPLM Cache configured at the remote site/LAN with a picache.conf site value that names the site/LAN, or the client $MDX_SITE environment variable
- An IPLM $MDX_CONFIG_DIR/piclient.conf with [RemoteMap x] sections configured

RemoteMap functionality relies on having either:

- PiCache configured at each site/LAN with the correct site setting (alphanumeric string), or
- The client $MDX_SITE environment variable set (alphanumeric string)

An example (partial) /etc/mdx/picache.conf file with site set to 'aus' is shown here:

```
[main]
# PI cache root directory
root = /picache-root
# PI cache site
site = aus
# PI cache server listening port
port = 5000
```
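The pi client obtains the site by querying the PiCache, but the value itself lives in the [main] section shown above. Assuming picache.conf is INI-compatible, a script can read it with configparser; picache_site is a hypothetical helper.

```python
import configparser
from typing import Optional

# Partial picache.conf from above.
PICACHE_CONF = """
[main]
# PI cache root directory
root = /picache-root
# PI cache site
site = aus
# PI cache server listening port
port = 5000
"""


def picache_site(conf_text: str) -> Optional[str]:
    """Return the [main] site value from picache.conf text, or None if absent."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    return parser.get("main", "site", fallback=None)
```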

The commit server mapping is done by configuring the IPLM RemoteMap section of the $MDX_CONFIG_DIR/piclient.conf file.

$MDX_CONFIG_DIR/piclient.conf - RemoteMap syntax:

```
[RemoteMap <identifier_1>]
# commit server
<site> = <host>:<port>
# replica servers
<site> = <host>:<port>
<site> = <host>:<port>

[RemoteMap <identifier_2>]
# commit server
<site> = <host>:<port>
# replica servers
<site> = <host>:<port>
<site> = <host>:<port>
```

Where:

- <identifier> is a symbolic name for the region or WAN subnet (alphanumeric string)
- <site> is the key and should match the site setting in the PiCache picache.conf file OR the client $MDX_SITE environment variable (alphanumeric string)
- <host>:<port> is the Helix Core p4 commit, replica, edge, or proxy server; there should be only one entry for each commit server
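Because there should be only one entry for each commit server, a quick consistency check can flag any host:port value that appears in more than one RemoteMap section. This is a hypothetical validation sketch, assuming piclient.conf is INI-compatible; it is stricter than the stated rule, since it flags any repeated host:port and not only commit servers. configparser's default strict mode also rejects a site key repeated within one section.

```python
import configparser
from collections import defaultdict
from typing import Dict, List, Set


def duplicate_servers(piclient_conf: str) -> Dict[str, List[str]]:
    """Return each host:port listed in more than one RemoteMap section,
    mapped to the sorted names of the sections that list it."""
    # Default strict=True raises DuplicateOptionError for a repeated site key.
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep site keys exactly as written
    parser.read_string(piclient_conf)
    sections_by_server: Dict[str, Set[str]] = defaultdict(set)
    for section in parser.sections():
        if not section.startswith("RemoteMap "):
            continue  # ignore the rest of piclient.conf
        for _site, server in parser.items(section):
            sections_by_server[server.strip()].add(section)
    return {
        server: sorted(sections)
        for server, sections in sections_by_server.items()
        if len(sections) > 1
    }
```

An empty result means every server is listed in exactly one RemoteMap section.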

Example RemoteMap configuration:

```
...
# RemoteMap config section to map a remote Helix Core edge/proxy
# to the commit server that is configured in the IPLM IP host field.
#
# When creating an IPLM workspace, the RemoteMap function will create
# a workspace .p4config file that contains the corresponding
# IP client (workspace) P4 server mapping.
#
# Logic:
# 1) If the IP has a host field value,
#    a) the pi client will query the PiCache for the site setting (or $MDX_SITE env var)
#    b) find the commit server entry in (only) one of the RemoteMap sections
#    c) dynamically map the IP host value (in the workspace IP directory .p4config) to the
#       edge/proxy setting corresponding to the site
# 2) The pi client will default back to the IP host field value if there
#    are no RemoteMap section entries that correspond to:
#    a) the IP host value
#    b) the PiCache site setting (or $MDX_SITE env var)
# 3) An error condition will result for an IP if there is no route or map to
#    a valid commit server.
#
# Example server names:
#   commit: location-function:port
#   replica: from-to-function:port

[RemoteMap AUS_P4_COMMIT]
# commit server
aus = aus-p4commit:1666
# replica servers
sjc = sjc-aus-p4edge:1667
bjs = bjs-aus-p4proxy:1668

[RemoteMap SJC_P4_COMMIT]
# commit server
sjc = sjc-p4commit:1666
# replica servers
aus = aus-sjc-p4edge:1667
bjs = bjs-sjc-p4proxy:1668
```

Tip: The suggested model contains one RemoteMap section for each Helix Core commit server.

The mapping logic:

- If the IP has a host field value that differs from the $P4PORT client environment variable, the pi client:
  - Queries the PiCache for the site setting (or the $MDX_SITE environment variable)
  - Finds the commit server entry in (only) one of the RemoteMap sections
  - Dynamically maps the IPLM IP host value to the edge/proxy server corresponding to the site
  - Writes a .p4config file containing the mapped site edge/proxy server into the workspace IP directory
- The pi client falls back to the IP host field value if no RemoteMap section entry corresponds to:
  - The IP host value, or
  - The PiCache site setting (or the $MDX_SITE environment variable)
- An error condition results for an IP if there is no route or map to a valid commit server.
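The mapping logic can be sketched in Python against the example RemoteMap configuration. This is a simplified model, not the pi client's implementation: it assumes piclient.conf is INI-compatible and that the site has already been resolved, and it stands in for the "no route" check with a hypothetical reachable callback supplied by the caller. load_remote_maps and resolve_port are hypothetical names.

```python
import configparser
from typing import Callable, Dict, Optional

# RemoteMap section name -> {site: host:port}
RemoteMaps = Dict[str, Dict[str, str]]


def load_remote_maps(piclient_conf: str) -> RemoteMaps:
    """Collect every [RemoteMap ...] section from INI-style piclient.conf text."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep site keys exactly as written (no lowercasing)
    parser.read_string(piclient_conf)
    return {
        section: {site: server.strip() for site, server in parser.items(section)}
        for section in parser.sections()
        if section.startswith("RemoteMap ")
    }


def resolve_port(
    ip_host: str,
    site: Optional[str],
    remote_maps: RemoteMaps,
    reachable: Callable[[str], bool] = lambda port: True,
) -> str:
    """Return the server an IP with host `ip_host` should use at `site`."""
    port = ip_host  # fallback: the IP host field value itself
    if site is not None:
        for entries in remote_maps.values():
            # The section that lists this commit server holds its site mappings.
            if ip_host in entries.values():
                port = entries.get(site, ip_host)
                break
    if not reachable(port):
        raise RuntimeError(f"no route or map to a valid commit server for {ip_host}")
    return port


EXAMPLE_CONF = """
[RemoteMap AUS_P4_COMMIT]
# commit server
aus = aus-p4commit:1666
# replica servers
sjc = sjc-aus-p4edge:1667
bjs = bjs-aus-p4proxy:1668

[RemoteMap SJC_P4_COMMIT]
# commit server
sjc = sjc-p4commit:1666
# replica servers
aus = aus-sjc-p4edge:1667
bjs = bjs-sjc-p4proxy:1668
"""

if __name__ == "__main__":
    maps = load_remote_maps(EXAMPLE_CONF)
    print(resolve_port("sjc-p4commit:1666", "aus", maps))  # aus-sjc-p4edge:1667
    print(resolve_port("sjc-p4commit:1666", "lon", maps))  # sjc-p4commit:1666
```

With the example configuration, an IP whose host is sjc-p4commit:1666 resolves to aus-sjc-p4edge:1667 at site aus, while a site with no entry falls back to the host value itself.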

This allows any secondary commit/edge servers to be mapped across multiple sites. The primary commit/edge servers can still rely on setting $P4PORT directly in the shell, with a different value set at each site, pointing to the appropriate commit or local edge server.