Hardware for IPLM package installation

Understand the hardware deployment options for package-based installations. Perforce IPLM can be deployed either on its own or on the same machine as a Perforce commit server, with replicated edge servers at remote sites. The Perforce IPLM server is heavily threaded and benefits from a high number of cores. The version control system generates significant IO and benefits from high-performance local storage for the database, a high-performance SAN for the versioned files, and a 10GbE network connection. The example configurations are divided by the number of users supported and by whether PiServer is deployed on the same machine as a Perforce server.

For large deployments, we recommend deploying Perforce IPLM on a separate machine from the DM server.

PiServer performance example

IPLM Server uses an efficient design to reduce hardware load. Much of the scale is achieved using parallelism, which is in turn enabled using multi-core machines. For this reason we recommend a minimum of 8 cores and 32GB memory on the host machine.
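A candidate Linux host can be checked against this minimum with a short script. The following is a minimal sketch, not a Perforce tool: the function names are illustrative, and the memory probe assumes a Linux host where `os.sysconf` exposes `SC_PHYS_PAGES` and `SC_PAGE_SIZE`.

```python
import os

# Minimums taken from the recommendation above.
MIN_CORES = 8
MIN_MEMORY_GB = 32


def meets_minimum(cores: int, memory_gb: float) -> bool:
    """Return True if the core count and memory both meet the stated minimum."""
    return cores >= MIN_CORES and memory_gb >= MIN_MEMORY_GB


def probe_host() -> tuple[int, float]:
    """Read the logical core count and physical memory (GiB) of the local host.

    Assumption: Linux, where os.sysconf provides SC_PHYS_PAGES and SC_PAGE_SIZE.
    """
    cores = os.cpu_count() or 0
    memory_bytes = os.sysconf("SC_PHYS_PAGES") * os.sysconf("SC_PAGE_SIZE")
    return cores, memory_bytes / 1024**3


if __name__ == "__main__":
    cores, memory_gb = probe_host()
    status = "meets" if meets_minimum(cores, memory_gb) else "is below"
    print(f"{cores} cores, {memory_gb:.1f} GiB: host {status} the recommended minimum")
```

The operating system usually reports slightly less memory than the nominal installed size, so a machine sold as 32GB may read as just under 32 GiB. Treat the result as a guide rather than a strict pass/fail.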

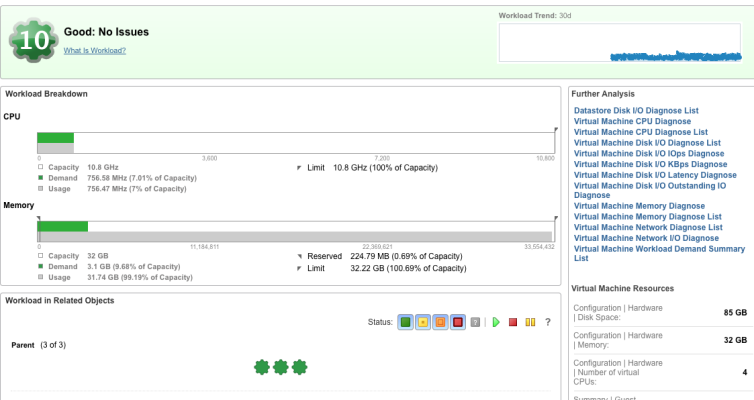

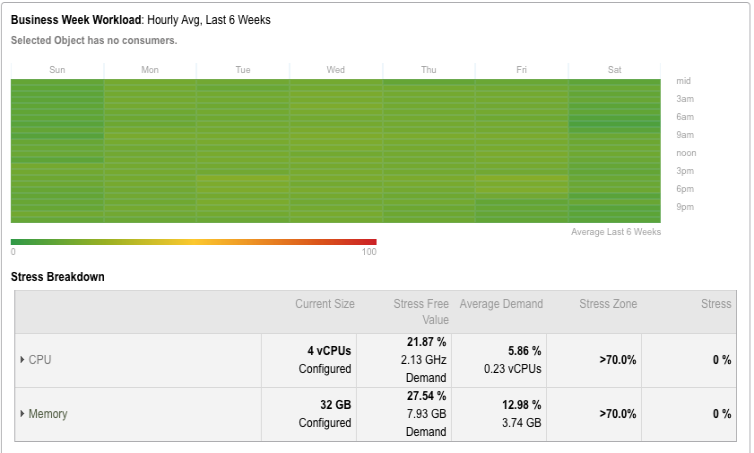

In the VMware examples shown here, the host machine is very lightly loaded. For reference, this customer environment has 3K IPs and several hundred users, with workspaces ranging from 2GB to 50GB.

Central Server

Combined Perforce IPLM/Perforce central server. See PiServer Administration page for more information on IPLM Cache. See new Perforce Administration page for more information on Perforce.

Central Server, option 1 (500 users)

| CPU | 16 core Xeon |
|---|---|
| Memory | 64GB |
| Networking | 10GbE preferred / minimum 1GbE |

| Storage | Type | Service | Target |
|---|---|---|---|
| 250GB | Local PCIe NVMe | Perforce IPLM Server | database files |
| 500GB | Local PCIe NVMe | Version Control | database files |
| 1TB | SAN | Version Control | versioned files |
| 2TB | NAS | Perforce IP Cache server | IP Cache files |

Central Server, option 2 (1000 users)

| CPU | 16 core Xeon (dual socket) |
|---|---|
| Memory | 64GB |
| Networking | 10GbE preferred / minimum 1GbE |

| Storage | Type | Service | Target |
|---|---|---|---|
| 500GB | Local PCIe NVMe | Perforce IPLM Server | database files |
| 1TB | Local PCIe NVMe | Version Control | database files |
| 2TB | SAN | Version Control | versioned files |
| 4TB | NAS | Perforce IP Cache server | IP Cache files |

Central Server, option 3 (1500 users)

| CPU | 16 core Xeon (dual socket) |
|---|---|
| Memory | 128GB |
| Networking | 10GbE preferred / minimum 1GbE |

| Storage | Type | Service | Target |
|---|---|---|---|
| 750GB | Local PCIe NVMe | Perforce IPLM Server | database files |
| 1.5TB | Local PCIe NVMe | Version Control | database files |
| 3TB | SAN | Version Control | versioned files |
| 6TB | NAS | Perforce IP Cache server | IP Cache files |
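The three Central Server options can be captured as data so that a provisioning script picks the right one for a given user count. The following is a minimal sketch: the class and function names are hypothetical, and treating each option's user count as an upper bound is an assumption, since the options above are labeled by user count only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CentralServerOption:
    max_users: int
    cpu: str
    memory_gb: int
    iplm_db_gb: int    # Local PCIe NVMe, Perforce IPLM Server database files
    vcs_db_gb: int     # Local PCIe NVMe, Version Control database files
    vcs_files_gb: int  # SAN, Version Control versioned files
    ip_cache_gb: int   # NAS, IP Cache files


# Values transcribed from the option tables above (1TB written as 1000GB).
CENTRAL_OPTIONS = [
    CentralServerOption(500, "16 core Xeon", 64, 250, 500, 1000, 2000),
    CentralServerOption(1000, "16 core Xeon (dual socket)", 64, 500, 1000, 2000, 4000),
    CentralServerOption(1500, "16 core Xeon (dual socket)", 128, 750, 1500, 3000, 6000),
]


def pick_central_option(users: int) -> CentralServerOption:
    """Return the smallest documented option that covers the given user count."""
    for option in CENTRAL_OPTIONS:
        if users <= option.max_users:
            return option
    raise ValueError(f"{users} users exceeds the largest documented option (1500)")
```

For example, `pick_central_option(800)` selects the 1000-user option. User counts above 1500 raise an error rather than extrapolate, because the tables above do not cover that range.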

Edge Server

Edge server (Perforce only) to serve remote sites, paired with Central Server above.

Edge Server, option 1 (500 users)

| CPU | 16 core Xeon |
|---|---|
| Memory | 32GB |
| Networking | 10GbE recommended / minimum 1GbE |

| Storage | Type | Service | Target |
|---|---|---|---|
| 500GB | Local PCIe NVMe | Version Control | database files |
| 1TB | SAN | Version Control | versioned files |
| 2TB | NAS | Perforce IP Cache server | IP Cache files |

Edge Server, option 2 (1000 users)

| CPU | 16 core Xeon |
|---|---|
| Memory | 32GB |
| Networking | 10GbE preferred / minimum 1GbE |

| Storage | Type | Service | Target |
|---|---|---|---|
| 1TB | Local PCIe NVMe | Version Control | database files |
| 2TB | SAN | Version Control | versioned files |
| 4TB | NAS | Perforce IP Cache server | IP Cache files |

PiServer + Perforce Combo VM (under 100 users)

For small customer deployments of up to 100 users, a single VM can host both the Perforce and Perforce IPLM services. This assumes a relatively light Perforce workload; high IO rates can slow PiServer down.

| Users | Resource | Specification | Service | Target |
|---|---|---|---|---|
| 100 users | CPU | 8 core Xeon | | |
| | Memory | 32GB | | |
| | Networking | 10GbE preferred / minimum 1GbE | | |
| | Storage | 500GB Local PCIe NVMe | Neo4j Service | Local Neo4j db files |
| | Storage | 500GB Local PCIe NVMe | Perforce Service | Local Perforce db files* |

*The versioned Perforce files should be kept on separate NFS-mounted storage. Only the Perforce database files should be kept locally on the VM.
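One way to confirm that the database directory is local while the versioned files sit on NFS is to look up each path in the Linux mount table. The following is a minimal sketch under stated assumptions: it parses text in the `/proc/mounts` format, the function names and the paths in the usage example are hypothetical, and it does not decode escaped characters (such as `\040` for a space) in mount points.

```python
import os


def filesystem_type(path: str, mounts_text: str) -> str:
    """Return the filesystem type of the mount that contains `path`.

    `mounts_text` is the content of /proc/mounts: one mount per line with
    whitespace-separated fields (device, mount point, type, options, ...).
    """
    path = os.path.abspath(path)
    best_mount, best_type = "", "unknown"
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue
        mount_point, fs_type = fields[1], fields[2]
        prefix = mount_point.rstrip("/") + "/"
        # Keep the longest matching mount point; on ties, the later entry wins.
        if (path + "/").startswith(prefix) and len(mount_point) >= len(best_mount):
            best_mount, best_type = mount_point, fs_type
    return best_type


def is_nfs(path: str, mounts_text: str) -> bool:
    """Return True if `path` lives on an NFS mount (type nfs, nfs4, ...)."""
    return filesystem_type(path, mounts_text).startswith("nfs")
```

On a live host, the mount table can be read with `open("/proc/mounts").read()`. With hypothetical paths, `is_nfs("/p4/db", mounts)` should be false for the database directory and `is_nfs("/p4/depot", mounts)` true for the versioned files.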

Dedicated PiServer VM

The smallest configuration assumes 200 human users with typical interactive workflows. Automation-heavy flows (such as Jenkins-based CI) require more resources and should be discussed with Perforce. See the PiServer Administration section for more information on PiServer.

| Users | Resource | Specification | Service | Target |
|---|---|---|---|---|
| 200 users | CPU | 8 core Xeon | | |
| | Memory | 16-24GB | | |
| | Networking | 10GbE preferred / minimum 1GbE | | |
| | Storage | 500GB Local PCIe NVMe | Neo4j Service | Local Neo4j db files |
| 500 users | CPU | 8-16 core Xeon | | |
| | Memory | 32GB | | |
| | Networking | 10GbE preferred / minimum 1GbE | | |
| | Storage | 1TB Local PCIe NVMe | Neo4j Service | Local Neo4j db files |
| 1000+ users | CPU | 16-32 core Xeon | | |
| | Memory | 64GB | | |
| | Networking | 10GbE preferred / minimum 1GbE | | |
| | Storage | 1TB Local PCIe NVMe | Neo4j Enterprise Service | Local Neo4j db files |

Notes:

- The PiServer machine should use local storage or SAN. NFS-mounted storage is not recommended because it degrades performance.

- PiServer can be deployed as a virtual machine as long as the storage requirements above can be accommodated. Contact Perforce for access.

- When using Neo4j Enterprise, provision as many high-frequency cores and as much memory as you can. These resources are the primary limit on request throughput.

- When DB throughput becomes an issue, deploy Neo4j in an HA cluster; the stack (PiServer + DB) scales horizontally.

- When provisioning in the cloud, add 25% compute capacity to make up for the overhead.
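The cloud note above amounts to a simple calculation. The following is a minimal sketch that reads "compute capacity" as core count, which is an assumption, and the function name is illustrative. Cloud instance sizes come in fixed steps, so in practice the result would be rounded up to the next available size.

```python
import math

CLOUD_OVERHEAD = 0.25  # "add 25% compute capacity" from the note above


def cloud_cores(on_prem_cores: int) -> int:
    """Scale an on-premises core count by 25% and round up to a whole core."""
    return math.ceil(on_prem_cores * (1 + CLOUD_OVERHEAD))


if __name__ == "__main__":
    # Core counts taken from the Dedicated PiServer VM table above.
    for cores in (8, 16, 32):
        print(f"{cores} on-premises cores -> {cloud_cores(cores)} cloud cores")
```

For the 8, 16, and 32 core configurations, this gives 10, 20, and 40 cores respectively.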

IPLM Cache Server (VM)

Suggested hardware for a dedicated IPLM Cache server. See IPLM Cache administration for more information on IPLM Cache.

| CPU | 16 core Xeon |
|---|---|
| Memory | 32-64GB |
| Networking | 10GbE recommended / minimum 1GbE |

| Storage | Type | Service | Target | Notes |
|---|---|---|---|---|
| 500GB/1TB | Local SSD RAID1 | Service daemons (redis/mongodb/picache) | database files | |
| 2TB* | NAS | | IPLM Cache shared files | Dependent on the number of IPVs in the system, the amount of data, and the effectiveness of deduplication on the filer |